Web archiving at Westminster

Since its development in the 1990s, the web has become a central part of our lives. As historian Ian Milligan argues in his book, History in the Age of Abundance?, it is impossible to imagine writing a history of the present day without reference to the web. At the University, our websites form a vital record of our teaching and research activities and of how we have shared them with the community. This blog will look at how we have been working to preserve these records so that we can continue to tell the story of the University into the future.

Why we need to archive the web

Websites may feel permanent, but studies have suggested average website lifespans of 100 days or less. Even where sites stay up, content on the web is frequently moved or overwritten, and research by the Harvard Law Review found that more than 70% of cited URLs within a selection of legal journals no longer linked to the originally cited information. At the University, while key sites like our institutional website are likely to remain up for some time, their content will often be overwritten. Meanwhile, smaller sites that reflect a particular research project or activity will often have a much shorter lifespan. Ultimately, the ongoing cost of hosting and maintaining a site means that when a particular project comes to an end or relevant staff move on, an associated site is likely to be shut down. In response to these issues, web archiving aims to capture and preserve not just the information stored on a site but also the way in which it was presented and accessed, allowing future researchers to see how it was experienced by users at the time.

How web archiving works: the University website

There are two main approaches to web archiving. Large-scale automatic capture of websites, of the sort done by the Internet Archive or the UK Web Archive, uses software agents called crawlers. A crawler visits an initial page – called a seed – makes an archival copy of the page and searches for any links. The crawler then follows each of the links, captures the pages it finds, searches for links and repeats the process. As such crawls are potentially infinite, they are usually limited in scope by ‘domain’ (for example instructing the crawler to only archive pages from westminster.ac.uk and not follow external links), ‘depth’ (the number of times the crawler continues following links from the original seed), or simply by crawl time or file size.
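The crawl loop described above can be sketched in a few lines of Python. This is a minimal illustration rather than how the Internet Archive, the UK Web Archive or our own partners actually crawl: the function names (`crawl`, `in_scope`), the in-memory `FAKE_SITE` and its page paths are all hypothetical, and a plain dictionary stands in for fetching pages over the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def in_scope(url, domain):
    """'Domain' limit: keep only URLs on the domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


def crawl(seed, fetch, domain, max_depth):
    """Breadth-first crawl from the seed; returns {url: html} for captured pages."""
    captured = {}
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue:
        url, depth = queue.popleft()
        html = fetch(url)  # assumption: fetch returns the page HTML, or None if missing
        if html is None:
            continue
        captured[url] = html  # the 'archival copy' of the page
        if depth >= max_depth:
            continue  # 'depth' limit: stop following links from here
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links against the page URL
            if link not in seen and in_scope(link, domain):
                seen.add(link)
                queue.append((link, depth + 1))
    return captured


# Hypothetical three-page site with one external link, held in memory.
FAKE_SITE = {
    "https://www.westminster.ac.uk/": (
        '<a href="/about">About</a> <a href="https://example.org/">External</a>'
    ),
    "https://www.westminster.ac.uk/about": '<a href="/about/history">History</a>',
    "https://www.westminster.ac.uk/about/history": "<p>History page</p>",
}

archive = crawl(
    "https://www.westminster.ac.uk/", FAKE_SITE.get, "westminster.ac.uk", max_depth=1
)
print(sorted(archive))
```

With `max_depth=1` the sketch captures the seed and the page it links to directly, skips the external link, and does not go on to the history page. Raising the depth to 2 would bring in the third page. A real crawler would also limit by crawl time or file size, as noted above.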

This is the approach we take for our regular captures of the University’s main website and other key sites. Since 2018, our partners Mirrorweb have crawled the site twice a year and transferred the resulting archive files for us to look after in the university archive’s digital repository. Websites are preserved in a standard archival file format called WARC. With the appropriate software, WARC files can then be viewed and interacted with like a normal website.
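To give a rough sense of what a WARC file contains, the sketch below builds a single 'response' record by hand: a block of WARC header fields, a blank line, and then the HTTP response exactly as the server sent it. This is an illustration only, not the tooling our partners use. The function name `build_warc_response` and the example page are hypothetical, and real archives are normally written with dedicated libraries, usually with each record compressed.

```python
import uuid
from datetime import datetime, timezone


def build_warc_response(target_uri, http_payload, when):
    """Return one uncompressed WARC 'response' record as bytes (illustrative only)."""
    stamp = when.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    header_lines = [
        "WARC/1.0",
        "WARC-Type: response",
        f"WARC-Target-URI: {target_uri}",
        f"WARC-Date: {stamp}",
        f"WARC-Record-ID: <urn:uuid:{uuid.uuid4()}>",
        "Content-Type: application/http; msgtype=response",
        f"Content-Length: {len(http_payload)}",  # length of the block in bytes
    ]
    head = "\r\n".join(header_lines).encode("utf-8") + b"\r\n\r\n"
    # Each record ends with two CRLF pairs after the content block.
    return head + http_payload + b"\r\n\r\n"


# Hypothetical captured HTTP response: status line, headers, blank line, body.
payload = b"HTTP/1.1 200 OK\r\nContent-Type: text/html\r\n\r\n<p>Hello</p>"
record = build_warc_response(
    "https://www.westminster.ac.uk/",
    payload,
    datetime(2021, 5, 1, 12, 0, 0, tzinfo=timezone.utc),
)
print(record.decode("utf-8"))
```

Because the record keeps the server's response headers alongside the page content, playback software has what it needs to serve the page back to a browser much as the original site did.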

How web archiving works: rapid response collecting

While crawl-based systems are useful for capturing larger sites, they are technically complex to manage and can be costly. Automatic crawls can also be less effective at capturing complex or dynamic content, especially interactive material. For this reason, the digital arts organisation Rhizome developed Conifer/Webrecorder as a solution that would both allow for higher-fidelity capture and let individuals and smaller organisations create their own web archives. These systems work by allowing the user to click through the website as if they were using it, recording the interactions between the browser and the site in a WARC file.

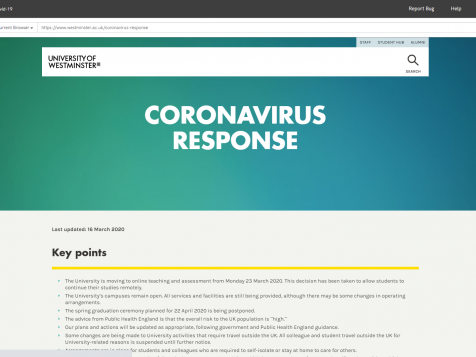

At the University we make use of Conifer/Webrecorder either where we have experienced technical issues with our automated crawls or, more often, when we want to quickly capture content that is in danger of being overwritten or deleted. For example, this is the approach we have taken towards collecting web pages relating to the University’s coronavirus response, which, particularly during the early days of the pandemic, were frequently being overwritten.

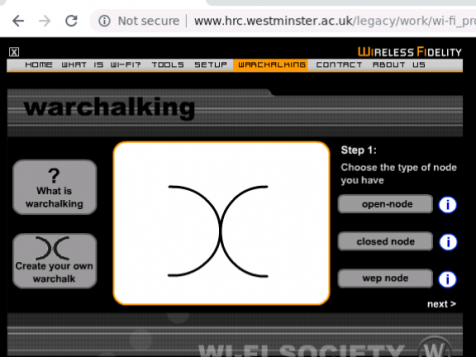

We also typically use Conifer/Webrecorder when we need to capture smaller sites that are due to be decommissioned. Like all potential accessions, sites are first appraised to make sure they fit with our Collection and Acquisition policy. For websites, this means that they must be well-used sites that document a key university activity such as teaching or research, or are in themselves of historical interest. Web archives are time-consuming to create and use a lot of digital storage so, as with all forms of archiving, it’s not possible to capture everything. A good example of the kind of site we collect with Conifer is the pages of the Hypermedia Research Centre, which conducted innovative research on internet politics and culture at Westminster in the 1990s and early 2000s. These pages are a particularly interesting example of early internet culture, but they also posed a technical challenge for conventional web archiving as they included some examples of student work using Flash. Flash was a software platform that enabled rich interactive web content but which has now been deprecated. Fortunately, Conifer provides emulated browsers that support Flash, so we were able to use it to capture the student work along with the rest of the site.

Issues with web archives

As suggested above, one of the first things to recognise is that, as with conventional archives, not everything will make it into a web archive. However, even if a site has been archived, researchers also need to keep in mind that no archival copy will be a 100% faithful recreation of the original experience. Websites use a wide variety of underlying technologies, many of which change frequently, making perfect capture and playback impossible. At the University, although we are careful to perform quality assurance on our captures, not all problems can be resolved and you might come across missing images or media and the occasional broken link. Archives will also always, to some extent, be mediated by the software that was used to create them and play them back on the user’s computer. These issues mean that web archives, like all archival sources, should be approached critically. For more advice on using web archives as a researcher, see our libguide on working with digital archives.

If you have your own website or manage one for work, there are steps you can take to make it more ‘archivable’. Fortunately, as web crawlers often work in a similar way to screen readers and other accessibility tools, many of the steps you take to enhance accessibility will also help make your pages easier to archive. The US Library of Congress has a helpful guide on this subject, and you can also use the Archive Ready site checker to get a quick idea of how compatible your site would be with common web archiving systems.

Providing access to web archives can also be challenging, and access to web archives held by the University is currently provided on request. If you are a researcher interested in accessing our archives, please contact us.

Jacob Bickford May 2021